Biomechanics and Player Availability in the NBA

May, 2023

Introduction

With the advent of player tracking in the early 2010s, the NBA moved quickly toward measuring biomechanics to optimize player performance and minimize injury risk. The movement continues to carry ethical and financial implications (for example, in contract negotiations) and to raise concerns about its effectiveness.

Given the debate surrounding load management and the apparent rise in major injuries, can we identify a causal link between the use of biomechanics and player availability?

Methodology: Causal Inference

Biomechanics were first implemented prior to the 2013-2014 season by four teams: the San Antonio Spurs, Dallas Mavericks, Houston Rockets, and New York Knicks. Using publicly accessible data from Basketball-Reference.com for the 2013 and 2014 seasons, I applied a difference-in-differences regression model to estimate the causal effect on games played, which serves as a proxy for availability. I defined treatment as the use of biomechanics and/or wearable technology, assigning all players on those four teams to the treatment group and the rest of the league to the control group.

Difference-in-differences is a quasi-experimental statistical technique that compares treatment and control groups in two time periods – before and after treatment – in order to estimate the causal effect of treatment.
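
As a minimal sketch of the idea, the simplest version of the estimator can be computed from four group means, without any covariates. The records below are toy values invented for illustration (they are not the Basketball-Reference data), and the function names are hypothetical.

```python
from statistics import mean

# Toy records (hypothetical, not the Basketball-Reference data):
# (group, season, games_played), where group is "treatment" or "control".
records = [
    ("control", 2013, 70), ("control", 2013, 74), ("control", 2013, 66),
    ("control", 2014, 68), ("control", 2014, 70), ("control", 2014, 63),
    ("treatment", 2013, 62), ("treatment", 2013, 68),
    ("treatment", 2014, 66), ("treatment", 2014, 72),
]

def group_mean(rows, group, season):
    """Average games played for one group in one season."""
    return mean(gp for g, s, gp in rows if g == group and s == season)

def diff_in_diff(rows, pre=2013, post=2014):
    """Change in the treatment group minus change in the control group."""
    treated_change = group_mean(rows, "treatment", post) - group_mean(rows, "treatment", pre)
    control_change = group_mean(rows, "control", post) - group_mean(rows, "control", pre)
    return treated_change - control_change

estimate = diff_in_diff(records)
print(f"diff-in-diff estimate: {estimate:+.2f} games")
```

With these toy numbers the treatment group gains 4 games while the control group loses 3, so the estimate is +7 games. Subtracting the control group's change is what removes league-wide shifts that affect both groups.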

Assumptions

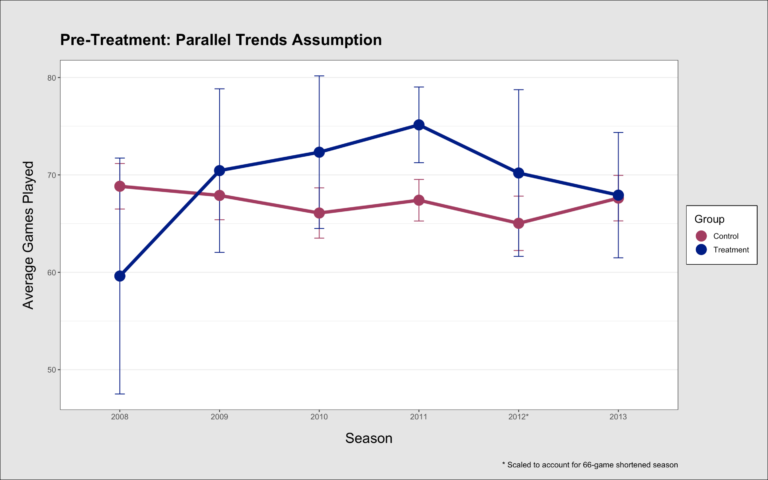

Difference-in-differences relies on several key assumptions of causal inference. Perhaps the most important is the Parallel Trends Assumption, which states that, in the absence of treatment, the treatment group and control group would have followed the same trend over time. The two groups need not be equal; rather, the difference between them should remain relatively constant over time.

Although this assumption cannot be verified empirically from observational data alone, I supported it by graphing the pre-treatment trend in the average number of games played for the control and treatment groups, pictured below. Note that the only players included are active and in the rotation; each had to average at least 15 minutes played in both 2013 and 2014.

While the lines are not perfectly parallel, this can likely be attributed to a relatively small sample size: the treatment group contains between 6 and 13 players in each season, while the control group contains between 161 and 188. Intuitively, the control and treatment groups also shouldn’t differ in practice, since any major event in the NBA, such as the 2011 Lockout, theoretically affected each team equally. In other words, I assert that the only difference between the control and treatment groups is the treatment itself.
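
One informal way to put a number on "relatively constant" is to track the gap between the two group means across pre-treatment seasons. The sketch below assumes toy records and hypothetical names. It is a descriptive check only, not a formal test of the assumption.

```python
from statistics import mean

# Hypothetical pre-treatment records: (group, season, games_played).
pre_records = [
    ("control", 2011, 62), ("control", 2011, 58),
    ("control", 2012, 66), ("control", 2012, 62),
    ("control", 2013, 70), ("control", 2013, 68),
    ("treatment", 2011, 56), ("treatment", 2011, 54),
    ("treatment", 2012, 61), ("treatment", 2012, 58),
    ("treatment", 2013, 66), ("treatment", 2013, 62),
]

def seasonal_gap(rows):
    """Return {season: control mean - treatment mean} for each season."""
    gaps = {}
    for season in sorted({s for _, s, _ in rows}):
        control = mean(gp for g, s, gp in rows if g == "control" and s == season)
        treated = mean(gp for g, s, gp in rows if g == "treatment" and s == season)
        gaps[season] = control - treated
    return gaps

gaps = seasonal_gap(pre_records)
# Under parallel trends the gap should stay roughly constant across seasons.
spread = max(gaps.values()) - min(gaps.values())
for season, gap in gaps.items():
    print(season, f"gap = {gap:.1f}")
print(f"spread of the gap across seasons: {spread:.1f}")
```

A small spread relative to the season-to-season movement in each group is consistent with parallel pre-trends. With a treatment group of only 6 to 13 players, some wobble in the gap is expected from noise alone.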

Regression Model
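
The variable definitions in this section suggest a specification of roughly the following form. The coefficient symbols and the outcome name GP (games played by player i in season t) are assumptions introduced here for illustration, not notation taken from the original model.

```latex
\mathrm{GP}_{i,t} = \beta_0
  + \beta_1\,\mathrm{age}_{i,t}
  + \beta_2\,\mathrm{lastGP}_{i,t}
  + \beta_3\,\mathrm{tech}_i
  + \beta_4\,\mathrm{time}_t
  + \delta\,(\mathrm{tech}_i \cdot \mathrm{time}_t)
  + \varepsilon_{i,t}
```

In this form, the coefficient δ on the interaction term is the diff-in-diff estimator.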

age : player’s age during the given season

lastGP : games played in the previous season

tech : dummy variable indicating the player’s assignment group (0 for control, 1 for treatment)

time : dummy variable indicating whether the given season was pre- or post-treatment (0 if 2013, 1 if 2014)

tech ⋅ time : dummy variable indicating if tech and time are both equal to one (diff-in-diff estimator)
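
To make the roles of these variables concrete, here is a minimal sketch that fits an ordinary least squares regression on the five terms above using only the Python standard library. The player-seasons are invented for illustration, the helper names are hypothetical, and the sketch reports coefficients only. A statistics package would normally be used and would also report standard errors and p-values.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def ols(X, y):
    """Least-squares coefficients from the normal equations (X'X) b = X'y."""
    k = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(k)]
    return solve(xtx, xty)

# Hypothetical player-seasons: (age, lastGP, tech, time, games_played).
rows = [
    (24, 70, 0, 0, 72), (29, 65, 0, 0, 68), (33, 60, 0, 0, 61), (26, 78, 0, 0, 75),
    (25, 72, 0, 1, 69), (30, 68, 0, 1, 64), (34, 61, 0, 1, 55), (27, 75, 0, 1, 73),
    (23, 66, 1, 0, 64), (31, 62, 1, 0, 60), (36, 58, 1, 0, 57),
    (24, 64, 1, 1, 70), (32, 60, 1, 1, 66), (37, 57, 1, 1, 59),
]

# Design matrix columns: intercept, age, lastGP, tech, time, tech*time.
X = [[1.0, age, last_gp, tech, time, tech * time] for age, last_gp, tech, time, _ in rows]
y = [gp for *_, gp in rows]

names = ["intercept", "age", "lastGP", "tech", "time", "tech*time"]
coefs = dict(zip(names, ols(X, y)))
for name, value in coefs.items():
    print(f"{name:>10}: {value: .3f}")
```

The coefficient labelled tech*time is the diff-in-diff estimate after adjusting for age and lastGP. If the two covariates are dropped, the same coefficient reduces exactly to the difference of group-mean changes.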

We can now formally state the null hypothesis:
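
Writing δ for the coefficient on tech ⋅ time (the symbol is an assumption chosen here for illustration), the hypotheses would take roughly this form, with a two-sided alternative assumed:

```latex
H_0 : \delta = 0 \qquad \text{versus} \qquad H_A : \delta \neq 0
```

Under the null hypothesis, the use of biomechanics has no effect on games played beyond the trend shared with the control group.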

Results and Interpretation

The difference-in-differences regression model yielded statistically significant coefficients for the intercept, age, and lastGP, which is unsurprising given that age and prior-season games played are the potential confounders being controlled for. The tech and time coefficients were both negative. The time coefficient reflects the fact that the control group's average number of games played decreases between 2013 (69.9) and 2014 (66.8), and the tech coefficient reflects the fact that the treatment group (64.9) averaged fewer games than the control group (69.9) in 2013. This effect can be seen in the graph above, where the control group starts at a higher point than the treatment group in 2013 and is downward-sloping. The treatment group is upward-sloping due to the positive interaction coefficient, or the diff-in-diff estimator, represented by the dashed line connecting the actual treatment group outcome to the counterfactual outcome, that is, the outcome the treatment group would have had if it had followed a trend identical to the control group's.
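
The counterfactual endpoint of the dashed line can be approximated from the raw group means quoted above. This is a back-of-the-envelope sketch that ignores the age and lastGP adjustments, so the regression's fitted counterfactual may differ somewhat:

```latex
\begin{aligned}
\text{control change} &= 66.8 - 69.9 = -3.1 \\
\text{counterfactual treatment mean (2014)} &= 64.9 + (-3.1) = 61.8
\end{aligned}
```

The diff-in-diff estimator is then the gap between the treatment group's actual 2014 outcome and this counterfactual.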

Despite a diff-in-diff estimate of 7.89 games, the p-value of 0.101 is not statistically significant at conventional levels, so we fail to reject the null hypothesis.

Limitations

The first limitation of this project was the high variance caused by a limited sample size and potentially outdated or incomplete data. Working with historical observational data, I had no control over the relatively small sample size. Because the data I used is from over a decade ago, newer seasons could in principle be incorporated; the principal reason I elected not to do so was the ambiguity surrounding exactly when each team began using biomechanics. It’s highly likely that the use of biomechanics has become standard across all 30 teams today, but contractual obligations and privacy concerns have prevented exact dates of implementation from becoming public. With that said, estimating a treatment effect for 20-30 different team timelines, while controlling for other factors, would’ve been impractical and noisy.

A second and probably the most important limitation is the practice of load management, in which a coaching staff intentionally sits a player out in an attempt to decrease progressive physical stress and/or save energy for the postseason. Many teams, including the Spurs, who belonged to the treatment group, have been known to practice load management with their star players, often citing biomechanical data as the underlying motivation. Evidently, this complicates quantifying the effectiveness of biomechanics: a healthy player who is rested on the basis of biomechanical data records fewer games played even though his availability has not worsened. If this is the case, games played fails to be a sufficient proxy for availability.

Conclusion

Ultimately, I found no statistically significant causal link between the use of biomechanics and player availability, but the financial and strategic implications remain; if teams are willing to invest millions into player health, shouldn’t there be overwhelmingly positive effects? Furthermore, if the result of biomechanics is that players decide to sit out games, which subsequently lowers ticket sales and viewership, how should front offices react?

There’s also an ethical component, which has come to light in recent years through the NBA’s Collective Bargaining Agreement between the league and its players. The agreement states that biomechanical data can only be used for “player health and performance purposes along with team on-court tactical and strategic purposes.” Although its use is banned in contract negotiation talks, there remains a gray area when it comes to physical evaluations that occur before the finalization of any transaction – free agent signings, trades, draftees. Evaluating for injury risk is a valid justification for athletic trainers to record biomechanical data, but this could fall under the umbrella of contract negotiation talks, especially if it becomes a substantial factor. Nonetheless, the application of this project spans all sports at every level when it comes to physical therapy and overall health, and its use and regulation will continue to be debated on and off the court.

Completed for DATA 25900 at the University of Chicago